The New Boomtowns: How AI Data Centers Are Rewiring Power, Water, and Local Politics

During a cold week in late January 2026, parts of the U.S. power grid did what they always do under stress: prices spiked, utilities scrambled, and everyone involved pointed to the same underlying reality. Demand is rising faster than the system was built to handle.

This time, the new demand is not just more houses or more factories. It is something stranger: warehouses full of computers that never sleep, built to train and run artificial intelligence.

AI data centers are easy to misunderstand because they look like ordinary industrial buildings. No smokestacks. Few employees. Minimal traffic once construction ends. But behind the walls are thousands of power-hungry chips, packed into racks that run hot enough that cooling becomes a second industry inside the first. The “cloud” turns out to be physical, local, and increasingly difficult to hide from people who pay electric bills and live near the water supply.

What an AI data center actually is

A traditional data center stores and serves data: websites, photos, business software, streaming, and cloud computing. An AI data center does that too, but it is also a specialized factory for computation. The big difference is density.

AI workloads, especially training and large-scale inference, push far more electricity through the same square footage. That electricity becomes heat, and heat must be removed quickly to keep hardware from failing. So the facility’s real outputs are computation, heat, and a growing set of demands on local infrastructure.

This is why communities are suddenly arguing about things that used to feel abstract, like “capacity auctions,” water permits, and transmission interconnection queues.

The scale, in plain numbers

In 2024, U.S. data centers consumed an estimated 183 terawatt-hours (TWh) of electricity, a little over 4% of total U.S. electricity consumption, according to International Energy Agency (IEA) estimates summarized by Pew Research Center. Under the same assumptions, those estimates project consumption rising to 426 TWh by 2030.

The U.S. Department of Energy and Lawrence Berkeley National Laboratory, in a 2024 report to Congress, describe data center load growth as having tripled over the past decade and project that demand could double or triple by 2028.

Other forecasts vary. The Electric Power Research Institute (EPRI) has estimated that data centers could consume as much as 9% of U.S. electricity generation by 2030 in high-growth scenarios.
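One way to see how far apart these forecasts are is to convert each into the constant yearly growth rate it implies. The sketch below does that arithmetic for the figures quoted above. Two readings are assumptions, not taken from the reports themselves: "tripled over the past decade" is treated as total data center load roughly tripling over ten years, and the "double or triple by 2028" projection is assumed to start from a 2023 baseline (five years). The helper name `implied_annual_growth` is purely illustrative.

```python
def implied_annual_growth(multiple: float, years: float) -> float:
    """Constant yearly growth rate that turns 1 unit into `multiple` units over `years`."""
    return multiple ** (1 / years) - 1


scenarios = {
    # IEA estimate summarized by Pew: 183 TWh (2024) -> 426 TWh (2030)
    "IEA/Pew, 2024-2030": implied_annual_growth(426 / 183, 2030 - 2024),
    # DOE/LBNL: load roughly tripled over the past decade (assumed 10 years)
    "DOE/LBNL, past decade": implied_annual_growth(3, 10),
    # DOE/LBNL: double or triple by 2028, ASSUMING a 2023 baseline (5 years)
    "DOE/LBNL low, to 2028": implied_annual_growth(2, 5),
    "DOE/LBNL high, to 2028": implied_annual_growth(3, 5),
}

for name, rate in scenarios.items():
    print(f"{name}: about {rate:.1%} per year")
```

Under these assumptions the forecasts span roughly 15% to 25% growth a year. That gap sounds modest, but it compounds: over five years, about 15% a year doubles load, while about 25% a year triples it.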

The disagreement is not trivial. It is the whole story. The future hinges on how fast AI demand grows, how efficient chips and models become, and whether grid infrastructure expands fast enough to connect new generation and new data center campuses.

Why the grid is feeling it now

If you want the simplest explanation for the current friction, it is this: power systems were not designed for multiple new loads that look like small cities showing up on short timelines.

Data centers do not just add demand. They add demand in specific places, at specific times, often clustered together. Northern Virginia’s “Data Center Alley” has become the archetype, but similar clusters are emerging elsewhere because companies chase fiber routes, tax incentives, cheap land, and available transmission.

That clustering is why grid stress shows up as regional drama before it shows up as a national statistic.

Recent reporting captured that dynamic in real time: a winter storm tested strained grids, with wholesale prices spiking in regions already wrestling with data center growth. And the Financial Times noted that while AI was not yet the main driver of rising U.S. electricity bills, data centers were already influencing costs in places like PJM, the grid operator covering much of the Mid-Atlantic and parts of the Midwest, where planners are forecasting sharper load growth and pricing it into capacity markets.

The “build gas plants for AI” response

When utilities and developers cannot get enough clean generation and transmission built quickly, they reach for what they know: gas.

On January 29, 2026, both Wired and The Guardian highlighted a striking claim tied to a Global Energy Monitor analysis: a large share of proposed new gas capacity is being justified, directly or indirectly, by AI data center demand, and the pipeline of such projects is expanding rapidly.

It is worth pausing here, because this is where the AI data center story turns into an energy story, and then into a climate story, and then into a political story.

Gas plants can be built faster than many other resources, and they can provide firm power. But they also risk locking in decades of emissions and infrastructure costs. Even if some proposals never get built, partial build-outs can still shape rates, local air quality debates, and long-term planning.

Meanwhile, the Department of Energy itself has emphasized that AI data center deployment is a significant factor in near-term electricity demand growth, and it is actively framing the question as: how do you meet this demand with clean resources without destabilizing reliability?

The water side of the story, and why it is getting louder

Electricity is the headline. Water is the pressure point that makes it personal.

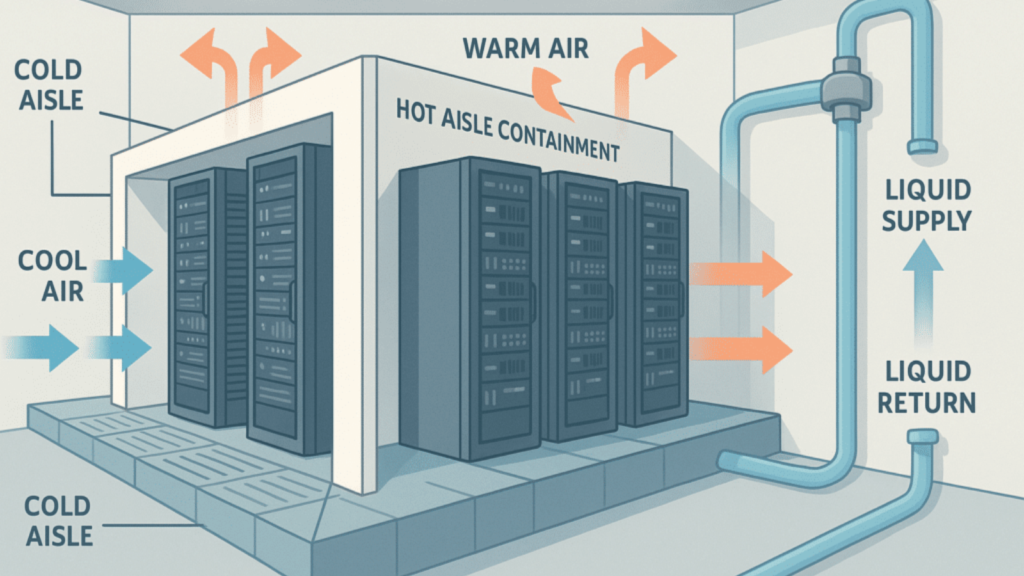

Cooling is not optional. And in many designs, the cheapest way to cool at scale is to evaporate water.

The Environmental and Energy Study Institute (EESI), a nonpartisan policy group, has summarized estimates that large AI data centers can consume up to 5 million gallons of water per day, and that a medium-sized facility can consume tens to hundreds of millions of gallons per year, depending on design and climate.
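Daily and yearly water figures are hard to compare at a glance, so here is a small unit-conversion sketch. It annualizes the "up to 5 million gallons per day" ceiling, converts it to liters using the exact definition of the U.S. gallon, and expresses the low end of "tens" and "hundreds" of millions of gallons per year (10 million and 100 million, chosen here only as illustrative endpoints) as daily averages. The function names are hypothetical.

```python
GALLONS_TO_LITERS = 3.785411784  # exact definition of the U.S. liquid gallon


def annual_gallons(gallons_per_day: float, days: int = 365) -> float:
    """Annualize a constant daily draw."""
    return gallons_per_day * days


def daily_gallons(gallons_per_year: float, days: int = 365) -> float:
    """Average daily draw implied by a yearly total."""
    return gallons_per_year / days


# Upper bound cited for large AI data centers
peak = annual_gallons(5_000_000)
print(f"{peak:,.0f} gal/yr, about {peak * GALLONS_TO_LITERS / 1e9:.1f} billion liters/yr")

# Illustrative endpoints for a medium-sized facility (not from the source)
for yearly in (10_000_000, 100_000_000):
    print(f"{yearly:,} gal/yr is about {daily_gallons(yearly):,.0f} gal/day")
```

The spread matters: the cited ceiling for a large facility is well over an order of magnitude above the low end for a medium one, which is why cooling design and climate dominate any single site's footprint.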

The Environmental Law Institute has pointed to research estimating that direct U.S. data center water consumption rose substantially over the last decade, and it highlights how AI training can have a meaningful water footprint depending on where and how it runs.

The key nuance, often missed in public arguments, is the tradeoff: you can reduce water use by relying more on electricity-intensive cooling, but that can raise power demand and emissions depending on the grid mix. An Undark explainer put it bluntly: water and electricity are often a seesaw, and the “best” choice depends on local conditions.
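The seesaw can be made concrete with a toy comparison. The sketch below scores two cooling options at two sites by adding emissions to water use, where water is converted into a common unit by a local "scarcity weight." Every number in it is hypothetical and chosen only to show the mechanism: an evaporative design that uses water but little extra power, versus a dry design that uses no on-site water but more electricity. It also ignores the water consumed by power plants themselves, which a fuller analysis would include.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CoolingOption:
    name: str
    onsite_water_l_per_kwh: float  # water evaporated per kWh of IT load (hypothetical)
    cooling_overhead: float        # extra electricity for cooling, as a fraction of IT load (hypothetical)


@dataclass(frozen=True)
class Site:
    grid_kg_co2_per_kwh: float     # local grid emissions intensity (hypothetical)
    water_weight_kg_per_l: float   # kg of CO2 the community treats as equal to 1 liter (hypothetical)


def impact(option: CoolingOption, site: Site, it_kwh: float) -> float:
    """Single combined score; lower is better. Counts only on-site water."""
    water_l = it_kwh * option.onsite_water_l_per_kwh
    co2_kg = it_kwh * (1 + option.cooling_overhead) * site.grid_kg_co2_per_kwh
    return co2_kg + site.water_weight_kg_per_l * water_l


def best_option(options: list[CoolingOption], site: Site, it_kwh: float = 1.0) -> CoolingOption:
    return min(options, key=lambda o: impact(o, site, it_kwh))


EVAPORATIVE = CoolingOption("evaporative", onsite_water_l_per_kwh=1.8, cooling_overhead=0.10)
DRY = CoolingOption("dry", onsite_water_l_per_kwh=0.0, cooling_overhead=0.30)

water_stressed = Site(grid_kg_co2_per_kwh=0.10, water_weight_kg_per_l=0.05)   # clean grid, scarce water
water_abundant = Site(grid_kg_co2_per_kwh=0.70, water_weight_kg_per_l=0.001)  # fossil-heavy grid, plentiful water

for label, site in [("water-stressed", water_stressed), ("water-abundant", water_abundant)]:
    print(label, "->", best_option([EVAPORATIVE, DRY], site).name)
```

With these invented inputs, the dry design wins where water is scarce and the grid is clean, and the evaporative design wins where water is plentiful and the grid is carbon-intensive. The point is not the specific numbers but that the same two designs swap ranks when local conditions change.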

This is why water fights tend to erupt in specific places. An AI data center’s impact is not a national average. It is a local constraint meeting a global industry.

Why communities keep saying: “We didn’t sign up for this”

From a town’s perspective, the bargain can feel asymmetric.

An AI data center brings construction jobs, some tax base, and indirect economic activity. But it can also bring:

- new transmission lines and substations

- higher local electricity system costs

- heavy water use in drought-prone basins

- diesel backup generators that raise air-quality concerns

- land-use changes that crowd out housing or other development

And because these facilities can be relatively quiet once built, residents sometimes feel the pain (rates, infrastructure, water) without seeing daily evidence of the benefit.

That is why the political fights often center on “who pays.”

If a utility upgrades the grid to serve a cluster of giant new loads, are those costs socialized across all ratepayers, or targeted more directly at the new customers? Policymakers are now actively exploring those questions, including structures that shift more costs onto large power users.
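A simple allocation model shows why this question is so contentious. The sketch below splits a hypothetical grid-upgrade cost between two customer classes: some fraction (`direct_share`) is assigned straight to the new large load, and the rest is socialized across everyone in proportion to annual energy use. The utility, its costs, and its customer counts are all invented, and real rate design often allocates costs by contribution to peak demand rather than by annual energy, so treat this as an illustration of the lever, not a tariff.

```python
def allocate_upgrade_cost(upgrade_cost: float,
                          class_energy_mwh: dict[str, float],
                          new_load_class: str,
                          direct_share: float) -> dict[str, float]:
    """Split a grid-upgrade cost between customer classes.

    direct_share (0..1) is assigned straight to the new large-load class;
    the remainder is socialized across every class in proportion to annual energy use.
    """
    if not 0.0 <= direct_share <= 1.0:
        raise ValueError("direct_share must be between 0 and 1")
    total_mwh = sum(class_energy_mwh.values())
    socialized = upgrade_cost * (1 - direct_share)
    shares = {cls: socialized * mwh / total_mwh for cls, mwh in class_energy_mwh.items()}
    shares[new_load_class] += upgrade_cost * direct_share
    return shares


# Hypothetical utility: all figures invented for illustration.
UPGRADE_COST = 200_000_000                                      # dollars
ENERGY = {"residential": 4_000_000, "data_center": 3_000_000}   # MWh per year
HOUSEHOLDS = 400_000

for direct_share in (0.0, 0.5, 1.0):
    shares = allocate_upgrade_cost(UPGRADE_COST, ENERGY, "data_center", direct_share)
    per_home = shares["residential"] / HOUSEHOLDS
    print(f"direct_share={direct_share:.0%}: residential pays ${shares['residential']:,.0f} "
          f"(about ${per_home:,.0f} per household)")
```

In this toy case, fully socializing the upgrade puts more than half the cost on households that did not request it, while full direct assignment puts none on them. Proposals that "shift more costs onto large power users" are, in effect, arguments about where to set `direct_share`.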

The biggest misconception: “AI is the only reason demand is rising”

AI is a major accelerant, but it is not the entire story.

The IEA has argued that, even with strong growth, data centers will account for only a minority of global electricity demand growth through 2030, with electrification, rising industrial output, EVs, and air conditioning also driving demand.

That matters because it reframes the question. The problem is not only “AI is coming.” It is “everything is becoming electric,” and AI data centers are arriving in the same decade.

What can actually make this less painful

There is no single fix, but there are levers that change the outcome.

Build and connect more clean power faster.

The constraint is increasingly interconnection and transmission. If clean generation cannot get connected quickly, gas becomes the default bridge.

Treat AI data centers like flexible industrial loads.

Some loads can be shifted in time. Demand response, onsite storage, and contracts that reward flexibility can reduce peak stress. Recent reporting suggests AI operators have been slower than some other large loads (like certain crypto facilities) to embrace that bargain at scale.
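The mechanics of load shifting can be shown with a toy hourly profile. The sketch below takes a hypothetical system load and a hypothetical data center load, trims the data center's flexible share (an assumed 30%) in any hour where the combined load exceeds a cap, and then places that energy in the earliest hours that still have headroom. It is a greedy illustration under invented numbers, not a real demand-response program; actual arrangements are negotiated with the grid operator and depend on which workloads can genuinely pause or move.

```python
def shift_flexible_load(system_mw, dc_mw, flexible_fraction, cap_mw):
    """Move the flexible part of a data center's load out of hours where
    system load plus data center load exceeds cap_mw, then place that energy
    in the earliest hours that still have headroom under the cap.

    Each list entry is one hour, so MW and MWh are numerically equal per slot.
    Returns (new_dc_mw, unplaced_mwh).
    """
    new_dc = list(dc_mw)
    moved = 0.0
    # Pass 1: curtail up to the flexible share during over-cap hours.
    for h, (sys_load, dc_load) in enumerate(zip(system_mw, dc_mw)):
        excess = sys_load + dc_load - cap_mw
        if excess > 0:
            cut = min(excess, dc_load * flexible_fraction)
            new_dc[h] -= cut
            moved += cut
    # Pass 2: re-place the moved energy wherever there is headroom.
    for h, sys_load in enumerate(system_mw):
        if moved <= 0:
            break
        headroom = cap_mw - (sys_load + new_dc[h])
        if headroom > 0:
            added = min(headroom, moved)
            new_dc[h] += added
            moved -= added
    return new_dc, moved


system = [800, 950, 1000, 700]  # hypothetical system load, MW, four hours
dc = [100, 100, 100, 100]       # hypothetical data center load, MW
new_dc, unplaced = shift_flexible_load(system, dc, flexible_fraction=0.3, cap_mw=1000)
print("peak before:", max(s + d for s, d in zip(system, dc)))
print("peak after: ", max(s + d for s, d in zip(system, new_dc)))
print("unplaced MWh:", unplaced)
```

The data center uses the same total energy, but the system peak falls because some work moves into a quieter hour. The peak still exceeds the cap in this example because only part of the load is assumed to be flexible, which is exactly why the size of the flexible share is the central term in any such contract.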

Use transparency as infrastructure.

Communities negotiate better when they know expected peak load, annual consumption, water source, cooling method, backup generator profile, and what the operator will do during grid emergencies.

Choose cooling based on local reality, not global habit.

In water-stressed regions, low-water cooling designs may be worth higher electricity costs. In power-constrained regions with abundant water, the calculus can flip.

Stop pretending the heat is waste.

Waste-heat reuse is not always easy, but in colder climates it can be meaningful if planned early.

The bottom line

AI data centers are not a futuristic concept. They are a present-day land rush for electricity, water, and permits.

Nationally, they still represent a modest share of total electricity demand, but their growth rate and geographic clustering are large enough to reshape local grids, drive new generation decisions, and trigger real political fights over costs and resources.

The decisive question is not whether AI data centers will expand. They will.

The question is whether the build-out forces a fossil-heavy power response and water conflict by default, or whether utilities, regulators, and the companies building these facilities treat energy, water, and flexibility as core design constraints rather than public-relations afterthoughts.